Understanding Node.js - Memory management

Introduction

This is the fourth article in our series on understanding the architecture of Node.js. In this post, we explore how Node.js manages memory, discuss methods to detect memory leaks, and review some of the most common issues that can cause them.

While this is the final article focusing on the core architecture of Node.js, it is by no means the last one in our series about Node.js and its ecosystem.

Memory management

Memory management involves allocating and deallocating memory. In the case of a Node.js application, this responsibility lies with the V8 engine, which handles it automatically. While the process is largely automated, it is essential to understand how it works to write programs that assist the engine in managing memory efficiently and avoiding memory leaks.

Memory allocation

Node.js allocates memory in the following areas:

Stack

The stack is a dedicated memory area used for managing local variables, function calls, and the execution context of a program. It is organized as a Last In, First Out (LIFO) data structure, which ensures efficient management of data (all operations are performed at the top of the stack).

The stack is automatically managed, meaning:

- Memory for variables is allocated when a function is called.

- The memory is automatically reclaimed when the function ends or the program exits.

- This makes the stack efficient for short-lived, scoped variables.
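The stack is also limited in size: every nested call pushes another frame, and frames are only popped when calls return. A minimal sketch that makes this visible uses a hypothetical measureStackDepth helper, which recurses until V8 reports that the stack is exhausted. The exact depth depends on the Node.js version, the platform, and V8's --stack-size option, so no expected number is given here.

```typescript
// Measures how many nested calls fit on the call stack before V8 gives up.
function measureStackDepth(): number {
  let maxDepth = 0;

  function recurse(level: number): void {
    maxDepth = level; // remember the deepest frame reached so far
    recurse(level + 1); // every call pushes a new frame on top of the stack
  }

  try {
    recurse(1);
  } catch (err) {
    // V8 reports stack exhaustion as "RangeError: Maximum call stack size exceeded"
    if (!(err instanceof RangeError)) throw err;
  }
  // Here every frame has been popped again and its memory reclaimed
  return maxDepth;
}

console.log(`The stack overflowed after about ${measureStackDepth()} nested calls`);
```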

Heap

The heap is a large block of memory reserved for dynamically allocated data such as objects, arrays, and closures. It is divided into the following spaces:

- Young Generation Space: A smaller section of the heap designed for newly created objects that are likely to have a short lifespan. This space typically starts at 8 MB (on 32-bit systems) or 16 MB (on 64-bit systems).

- Old Generation Space: A larger portion of the heap allocated for objects that need to persist longer in memory. It is significantly bigger than the Young Generation Space.

- Code Space: A dedicated area of the heap that stores executable code, i.e. the machine code generated by V8's compilers.

- Large Object Space: A specialized space for objects that are too large to be efficiently stored in either the Young or Old Generation spaces. Allocating such objects here avoids the high performance cost of copying them during garbage collection.
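To see these spaces in a running process, the built-in v8 module exposes getHeapSpaceStatistics(). The sketch below simply prints how much of each space is in use. The exact set of space names depends on the Node.js/V8 version (newer versions report a few additional spaces), so treat the output as version-dependent.

```typescript
import * as v8 from "v8";

// Print the used and total size of every V8 heap space, in MB
for (const space of v8.getHeapSpaceStatistics()) {
  const usedMb = (space.space_used_size / 1024 / 1024).toFixed(2);
  const sizeMb = (space.space_size / 1024 / 1024).toFixed(2);
  console.log(`${space.space_name}: ${usedMb} MB used of ${sizeMb} MB`);
}
```

In the output, new_space corresponds to the Young Generation, old_space to the Old Generation, code_space to the Code Space, and large_object_space to the Large Object Space.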

Memory releasing

Memory releasing is the process of removing unused objects from memory to reclaim space for new ones. In Node.js, this task is managed by a mechanism called the Garbage Collector.

The Garbage Collector tries to do part of its work during idle periods, when the CPU has fewer tasks to handle, but it also runs whenever new allocations need space. V8 uses two main types of collection:

- Scavenging (Minor GC): A fast process that scans only the young generation, removes unused objects, and promotes longer-lived objects to the old generation. An object that survives two scavenging cycles is moved to the old generation space.

- Mark-Sweep (Major GC): A slower process that scans the old generation. In the first phase (mark), it starts from the root object, traverses all referenced objects, and marks them. In the second phase (sweep), it removes all unmarked objects.
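The promotion rule for scavenging can be illustrated with a deliberately simplified toy model. ToyGenerationalHeap and its methods are hypothetical names; real V8 scavenging copies live objects between two semi-spaces, which this sketch does not model. It only shows that dead young objects are dropped and that an object surviving two scavenges ends up in the old generation.

```typescript
// Toy model only: V8 really copies live objects between two semi-spaces.
type YoungObject = { id: string; survivedScavenges: number };

class ToyGenerationalHeap {
  young: YoungObject[] = [];
  old: string[] = [];

  allocate(id: string): void {
    // New objects always start in the young generation
    this.young.push({ id, survivedScavenges: 0 });
  }

  // Minor GC: drop dead young objects, age the survivors, promote after two survivals
  scavenge(isLive: (id: string) => boolean): void {
    const stillYoung: YoungObject[] = [];
    for (const obj of this.young) {
      if (!isLive(obj.id)) continue; // unreachable: simply left behind
      obj.survivedScavenges += 1;
      if (obj.survivedScavenges >= 2) {
        this.old.push(obj.id); // promoted to the old generation
      } else {
        stillYoung.push(obj);
      }
    }
    this.young = stillYoung;
  }
}

const heap = new ToyGenerationalHeap();
heap.allocate("request");
heap.allocate("session");

const live = new Set(["session"]); // only "session" is still referenced
heap.scavenge((id) => live.has(id)); // "request" is dropped, "session" survives once
heap.scavenge((id) => live.has(id)); // "session" survives again and is promoted
console.log(heap.young.length, heap.old); // 0 [ 'session' ]
```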

Consider the following code:

```typescript
class Engine {
  // The parameter property declares and assigns this.power
  constructor(public power: number) {}
}

class Car {
  private engine: Engine;

  constructor(public model: string, power: number) {
    this.engine = new Engine(power);
  }

  public replaceEngine(newPower: number) {
    // The previous Engine is no longer referenced by this car
    this.engine = new Engine(newPower);
  }
}
```

And some code that uses the classes:

```typescript
const car1 = new Car("car1", 200);
const car2 = new Car("car2", 400);
// let, because car3 is set to null later
let car3: Car | null = new Car("car3", 150);
```

We could visualize the object graph as follows:

And now we will call:

```typescript
car2.replaceEngine(200);
car3 = null;
```

As a result, the graph changes to:

For simplicity, assume that all objects are now located in the Old Generation Space. In that case, they will be handled by the Mark-Sweep (Major GC). In the first phase (mark), the algorithm starts with the root object, traverses all referenced objects, and marks them. The graph could then look like this:

All objects reachable from the root were marked and colored blue. The second phase (sweep) finds all unmarked objects (the green ones) and removes them to reclaim space. After that, the object graph looks as follows:

However, in some cases, objects that are no longer needed may still be reachable from the root, even though they could otherwise be safely removed to reclaim space. This situation is known as a memory leak.
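The mark and sweep phases from the car example can be sketched as a toy model: objects are string ids in a Map, each listing the ids it references, and "root" stands in for the GC roots. The graph assumes the state after car2.replaceEngine(200) and car3 = null; the engine ids are hypothetical labels, not anything V8 exposes. The same sketch also shows why a leak is invisible to the collector: anything still reachable from root gets marked and survives.

```typescript
// Toy object graph: each object id maps to the ids it references.
type ObjectGraph = Map<string, string[]>;

// Mark phase: starting at the root, traverse and mark every reachable object.
function mark(graph: ObjectGraph, root: string): Set<string> {
  const marked = new Set<string>();
  const toVisit = [root];
  while (toVisit.length > 0) {
    const id = toVisit.pop()!;
    if (marked.has(id)) continue;
    marked.add(id);
    toVisit.push(...(graph.get(id) ?? []));
  }
  return marked;
}

// Sweep phase: remove every object that was not marked.
function sweep(graph: ObjectGraph, marked: Set<string>): string[] {
  const removed: string[] = [];
  for (const id of [...graph.keys()]) {
    if (!marked.has(id)) {
      graph.delete(id);
      removed.push(id);
    }
  }
  return removed;
}

// State after car2.replaceEngine(200) and car3 = null
const graph: ObjectGraph = new Map<string, string[]>([
  ["root", ["car1", "car2"]],
  ["car1", ["engine1"]],
  ["car2", ["engine2b"]], // the new engine with power 200
  ["car3", ["engine3"]], // no longer referenced from root
  ["engine1", []],
  ["engine2a", []], // car2's original engine with power 400
  ["engine2b", []],
  ["engine3", []],
]);

const removed = sweep(graph, mark(graph, "root"));
console.log(removed); // [ 'car3', 'engine2a', 'engine3' ]
```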

Memory leaks

When a memory leak occurs, your program occupies more and more memory and its overall performance degrades. Eventually, the program may crash because there is not enough space for new objects, resulting in an "Out of Memory" error. Below are some common bad coding practices that can lead to memory leaks:

Using global variables

Global variables are always referenced by a root object and stay in memory as long as the main program is running.

```typescript
import express from "express";

const app = express();

type CacheValue = {
  timestamp: number;
  value: number;
};

// Module-level object: reachable for the whole lifetime of the process
const cache: Record<string, CacheValue> = {};

app.get("/add/:a/:b", (req, res) => {
  const { a, b } = req.params;
  const cacheKey = `${a}_${b}`;

  if (cache[cacheKey]) {
    const { value } = cache[cacheKey];
    res.send(`Cached result: ${value}`);
    return;
  }

  const value = parseInt(a, 10) + parseInt(b, 10);
  // Entries are only ever added, never removed
  cache[cacheKey] = {
    timestamp: Date.now(),
    value,
  };
  res.send(`Calculated result: ${value}`);
});
```

In the above example, the cache only ever grows: new entries are appended, and older or unused ones are never removed. Because the module-level cache object is always reachable, the garbage collector cannot reclaim any of these entries, so the cache occupies more and more memory as new combinations of a and b are requested.

Mitigation strategy

- Proactively manage the lifecycle of global variables: For caches, consider implementing strategies such as time-based data removal or setting a maximum size for the cache to prevent unbounded memory growth.
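A minimal sketch of such a cache, assuming a fixed maximum number of entries and a time-to-live per entry. BoundedCache is a hypothetical helper, not an Express or Node.js API. It relies on the fact that a JavaScript Map iterates its keys in insertion order, so the first key is always the oldest entry. The optional now parameter exists only to make the behavior easy to check.

```typescript
// Hypothetical helper: a cache with a maximum size and a time-to-live per entry.
class BoundedCache<V> {
  private entries = new Map<string, { timestamp: number; value: V }>();

  constructor(private maxSize: number, private ttlMs: number) {}

  get(key: string, now: number = Date.now()): V | undefined {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (now - entry.timestamp > this.ttlMs) {
      this.entries.delete(key); // expired: drop it so it can be garbage collected
      return undefined;
    }
    return entry.value;
  }

  set(key: string, value: V, now: number = Date.now()): void {
    this.entries.delete(key); // re-inserting moves the key to the end of the Map's order
    this.entries.set(key, { timestamp: now, value });
    if (this.entries.size > this.maxSize) {
      // Maps iterate in insertion order, so the first key is the oldest entry
      const oldestKey = this.entries.keys().next().value;
      if (oldestKey !== undefined) this.entries.delete(oldestKey);
    }
  }

  get size(): number {
    return this.entries.size;
  }
}
```

In the route above, the plain object could be replaced by something like new BoundedCache<number>(1_000, 60_000), using cache.get(cacheKey) and cache.set(cacheKey, value). Expired entries are removed lazily, on read or when the size limit evicts them; a periodic cleanup could be added if that is not enough.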

Closure-based memory leak

An inner function keeps a reference to data from its outer function. That data cannot be garbage collected for as long as the inner function is reachable, even if the inner function is no longer needed.

```typescript
function generateArray(length: number) {
  const outerData = new Array(length).fill("*");

  // processData closes over outerData, so the whole array stays alive with it
  return function processData() {
    console.log(`Generated ${outerData.length} elements`);
  };
}

function closureLeak() {
  const functions: Array<() => void> = [];

  for (let i = 0; i < 1000; i++) {
    const largeArrayGenerator = generateArray(10_000);
    functions.push(largeArrayGenerator);
  }

  console.log("Functions created:", functions.length);
}
```

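The closure returned by generateArray captures the entire outerData array, even though processData only needs its length. Each of the 1,000 closures stored in functions therefore keeps its own 10,000-element array alive. If such closures are retained longer than necessary, for example in a long-lived collection, the arrays cannot be garbage collected, leading to unnecessary memory consumption.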

Mitigation strategy

- Prevent memory leaks caused by lingering references in closures: provide a mechanism to release or nullify the references when they are no longer needed. This ensures that large data structures can be garbage collected.

```typescript
function generateArraySafe(length: number) {
  let outerData = new Array(length).fill("*");

  function processData() {
    console.log(`Generated ${outerData.length} elements`);
  }

  // Call when the data is no longer needed so the array's contents can be collected
  function cleanup() {
    outerData.length = 0;
  }

  return { processData, cleanup };
}
```
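Another option, assuming processData really only needs the element count, is to capture just that value instead of the whole array. generateArrayLean is a hypothetical variant of generateArray: because no inner function references outerData, the array is not kept alive by the returned closure and becomes garbage as soon as generateArrayLean returns. processData returns its message here only so the result is easy to check.

```typescript
function generateArrayLean(length: number) {
  const outerData = new Array(length).fill("*");
  // Capture only the value the inner function needs, not the whole array
  const elementCount = outerData.length;

  return function processData(): string {
    const message = `Generated ${elementCount} elements`;
    console.log(message);
    return message;
  };
}

const processData = generateArrayLean(10_000);
processData(); // logs "Generated 10000 elements"
```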

Timers/intervals based memory leak

Timers and intervals in Node.js can cause memory leaks if they are not properly managed. When an interval (setInterval) is started, or a timer (setTimeout) is scheduled but is no longer needed, and it is never cleared, the callback function and everything it references stay in memory. This prevents the garbage collector from freeing them. The issue is particularly significant when the callback captures resources from its enclosing scope, which turns it into a closure-based memory leak.

```typescript
setInterval(() => {
  // Do something repeatedly
}, 1000);

// If this interval is never cleared, the callback stays in memory.
```

Mitigation strategy

- Clear timers and intervals when they are no longer needed: use `clearTimeout` or `clearInterval` to stop the timer or interval once its purpose is served.

```typescript
const intervalId = setInterval(() => {
  // Do something repeatedly
}, 1000);

// Release the callback (and whatever it references) once the work is done
clearInterval(intervalId);
```

- Minimize captured resources in closures: Avoid referencing variables or functions from the outer scope in timer or interval callbacks, unless absolutely necessary, to reduce the risk of closure-based memory leaks.
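When an application starts several intervals, keeping track of them in one place makes it easy to clear them all, for example during shutdown. A minimal sketch, where IntervalRegistry is a hypothetical helper built only on the standard setInterval and clearInterval functions:

```typescript
type IntervalHandle = ReturnType<typeof setInterval>;

// Hypothetical helper that remembers every interval it starts.
class IntervalRegistry {
  private handles = new Set<IntervalHandle>();

  start(callback: () => void, ms: number): IntervalHandle {
    const handle = setInterval(callback, ms);
    this.handles.add(handle);
    return handle;
  }

  stop(handle: IntervalHandle): void {
    clearInterval(handle); // the callback can now be garbage collected
    this.handles.delete(handle);
  }

  // Clear everything, e.g. when a module or server shuts down
  stopAll(): void {
    for (const handle of this.handles) clearInterval(handle);
    this.handles.clear();
  }

  get activeCount(): number {
    return this.handles.size;
  }
}

const intervals = new IntervalRegistry();
intervals.start(() => {
  // Do something repeatedly
}, 1000);

// Later, when the work is done:
intervals.stopAll();
```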

Unclosed event listeners

The EventEmitter class in Node.js has a default limit of 10 listeners per event. This is not a hard limit: additional listeners are still registered, but adding more than 10 without increasing the limit triggers the following warning:

(node:57) MaxListenersExceededWarning: Possible EventEmitter memory leak detected

This warning typically arises when you add listeners repeatedly, such as within a loop or other event-driven processes, without removing them when they are no longer needed.

For example:

```typescript
import EventEmitter from "events";

class MyEmitter extends EventEmitter {}

const emitter = new MyEmitter();

// Registers 15 listeners for the same event and never removes them
for (let i = 0; i < 15; i++) {
  emitter.on("data", (data) => console.log(data));
}

// Warning: MaxListenersExceededWarning
```

Mitigation strategy

- To avoid memory leaks, always remove listeners when they are no longer needed. Use either:

- emitter.off("data", listener) (preferred modern API)

- emitter.removeListener("data", listener) (older API, still valid)

Example:

```typescript
const listener = (data: string) => console.log(data);

emitter.on("data", listener);

// When the listener is no longer needed:
emitter.off("data", listener);
```

- Increase the listener limit (if necessary). If you intentionally need more than 10 listeners, increase the limit using `setMaxListeners()`:

```typescript
emitter.setMaxListeners(20);
```

However, raise the limit only to accommodate listeners you genuinely need. Do not raise it just to silence the warning when you do not expect that many listeners, because the warning may be pointing at a real leak.

- Use once for one-time listeners. For events that need to be handled only once, use `emitter.once()` instead of `emitter.on()`. For example:

```typescript
emitter.once("data", (data) => console.log(data));
```

The above will remove the listener automatically after execution.
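The effect of on, off, and once can be checked with listenerCount(), which reports how many listeners are currently registered for an event. A short sketch using the built-in events module; the listener and received names are illustrative only:

```typescript
import { EventEmitter } from "events";

const emitter = new EventEmitter();
const received: string[] = [];
const listener = (data: string) => received.push(data);

emitter.on("data", listener);
console.log(emitter.listenerCount("data")); // 1

emitter.off("data", listener); // explicit removal
console.log(emitter.listenerCount("data")); // 0

emitter.once("data", listener); // removed automatically after the first emit
emitter.emit("data", "first");
emitter.emit("data", "second");
console.log(emitter.listenerCount("data"), received); // 0 [ 'first' ]
```

If the count for an event keeps growing over the lifetime of the program, listeners are being added without being removed.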

Detecting memory leaks

Node.js provides built-in tools to help analyze and detect memory leaks in your application. These tools allow you to, for instance, trace garbage collection statistics to monitor how memory is managed or generate heap dumps to analyze memory usage over time.

Tracing garbage collector

To trace the garbage collector, start a Node.js program with the --trace-gc flag. Create the following program, which has a memory leak:

```typescript
import express from "express";

const app = express();
const port = 3000;

// Module-level array that is never emptied
const memoryLeakArray: Array<{ data: string[] }> = [];

function leakMemory() {
  const hugeObject = {
    data: new Array(100_000).fill("*"),
  };
  memoryLeakArray.push(hugeObject);
}

// Generate a memory leak every 5 seconds
setInterval(leakMemory, 5 * 1000);

app.listen(port, () => {
  console.log(`Example app listening on port ${port}`);
});
```

Then start the compiled program with node --trace-gc dist/index.js. After a while, you should see GC output like the following in the console:

```text
[40837:0x148008000] 50 ms: Scavenge 4.6 (4.8) -> 4.1 (5.8) MB, 0.3 / 0.0 ms (average mu = 1.000, current mu = 1.000) allocation failure;
[40837:0x148008000] 67 ms: Scavenge 5.4 (6.6) -> 4.9 (7.4) MB, 0.3 / 0.0 ms (average mu = 1.000, current mu = 1.000) allocation failure;
[40837:0x148008000] 98 ms: Scavenge 5.9 (7.6) -> 5.3 (7.6) MB, 0.2 / 0.0 ms (average mu = 1.000, current mu = 1.000) allocation failure;
[40837:0x148008000] 119 ms: Scavenge 6.2 (7.6) -> 5.7 (10.4) MB, 0.3 / 0.0 ms (average mu = 1.000, current mu = 1.000) allocation failure;
[40837:0x148008000] 149 ms: Scavenge 8.2 (10.5) -> 7.2 (11.3) MB, 0.4 / 0.0 ms (average mu = 1.000, current mu = 1.000) allocation failure;
[40837:0x148008000] 165 ms: Scavenge 8.6 (11.3) -> 7.8 (12.0) MB, 0.5 / 0.0 ms (average mu = 1.000, current mu = 1.000) allocation failure;
Example app listening on port 3000
[40837:0x148008000] 8286 ms: Mark-sweep (reduce) 9.4 (12.8) -> 7.4 (9.9) MB, 2.7 / 0.0 ms (+ 6.4 ms in 23 steps since start of marking, biggest step 3.6 ms, walltime since start of marking 10 ms) (average mu = 1.000, current mu = 1.000) finalize incremental marking via task; GC in old space requested
[40837:0x148008000] 8898 ms: Mark-sweep (reduce) 7.4 (9.9) -> 7.4 (9.4) MB, 3.5 / 0.0 ms (+ 7.0 ms in 40 steps since start of marking, biggest step 0.4 ms, walltime since start of marking 11 ms) (average mu = 0.983, current mu = 0.983) finalize incremental marking via task; GC in old space requested
[40837:0x148008000] 15175 ms: Scavenge 8.2 (10.2) -> 8.2 (10.2) MB, 1.2 / 0.0 ms (average mu = 0.983, current mu = 0.983) allocation failure;
[40837:0x148008000] 20177 ms: Scavenge 8.9 (11.0) -> 8.9 (11.0) MB, 2.9 / 0.0 ms (average mu = 0.983, current mu = 0.983) allocation failure;
```

Taking the first line as an example, the values correspond to:

- [40837:0x148008000]: PID of the running process and the JS heap instance

- 50 ms: time since the process started

- Scavenge: type of garbage collection

- 4.6: heap used before GC, in MB

- (4.8): total heap size before GC, in MB

- 4.1: heap used after GC, in MB

- (5.8): total heap size after GC, in MB

- 0.3 / 0.0 ms (average mu = 1.000, current mu = 1.000): time taken to run GC; "mu" stands for mutator utilization, the share of time the program itself (rather than the GC) was running

- allocation failure: reason for the GC

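Using the GC output, you can see how your program manages memory. In the last two lines, for example, a scavenge reclaims nothing (8.2 -> 8.2 MB and 8.9 -> 8.9 MB) while the used heap keeps growing, which is consistent with the leak in our program.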
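When a trace is long, reading it by eye gets tedious. A minimal sketch of a parser for lines in the format shown above; parseGcLine and GcEvent are hypothetical names. The --trace-gc output is not a stable interface and differs between Node.js/V8 versions, so the regular expression is an assumption that may need adjusting for your version.

```typescript
// One parsed --trace-gc line (format as printed in the example above).
interface GcEvent {
  pid: number;
  timeMs: number;
  type: string;
  usedBeforeMb: number;
  totalBeforeMb: number;
  usedAfterMb: number;
  totalAfterMb: number;
  reason: string;
}

const GC_LINE =
  /^\[(\d+):0x[0-9a-f]+\]\s+(\d+) ms: (.+?) ([\d.]+) \(([\d.]+)\) -> ([\d.]+) \(([\d.]+)\) MB, .*current mu = [\d.]+\) ([^;]+);/;

// Returns null for lines that are not GC trace lines (e.g. regular console output).
function parseGcLine(line: string): GcEvent | null {
  const m = GC_LINE.exec(line);
  if (!m) return null;
  return {
    pid: Number(m[1]),
    timeMs: Number(m[2]),
    type: m[3],
    usedBeforeMb: Number(m[4]),
    totalBeforeMb: Number(m[5]),
    usedAfterMb: Number(m[6]),
    totalAfterMb: Number(m[7]),
    reason: m[8],
  };
}

const sample =
  "[40837:0x148008000] 20177 ms: Scavenge 8.9 (11.0) -> 8.9 (11.0) MB, 2.9 / 0.0 ms (average mu = 0.983, current mu = 0.983) allocation failure;";
const event = parseGcLine(sample);
if (event && event.usedAfterMb >= event.usedBeforeMb) {
  // A collection that frees nothing while the heap keeps growing is worth a closer look
  console.log(`${event.type} at ${event.timeMs} ms reclaimed nothing`);
}
```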

Analyzing a heap dump

However, if it turns out that your program has a memory leak, you can use built-in tools to dump the heap to a file over time and analyze the dumps to identify the cause.

Using the v8 module, you can take a heap snapshot. Let's extend our previous program with the following functionality:

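```typescript
import * as fs from "fs";
import * as v8 from "v8";

// ... the rest of the previous program (express app, memoryLeakArray, leakMemory) ...

function createHeapSnapshot() {
  const snapshotStream = v8.getHeapSnapshot();
  const fileName = `${Date.now()}.heapsnapshot`;
  const fileStream = fs.createWriteStream(fileName);

  snapshotStream.pipe(fileStream);

  // Report the file only once the snapshot has been fully written
  fileStream.on("finish", () => {
    console.log(`Heap snapshot created: ${fileName}`);
  });
}

// Leak memory every 5 seconds (the same call as in the previous program, not an additional one)
setInterval(leakMemory, 5 * 1000);

// Create a heap snapshot every 10 seconds
setInterval(createHeapSnapshot, 10 * 1000);
```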

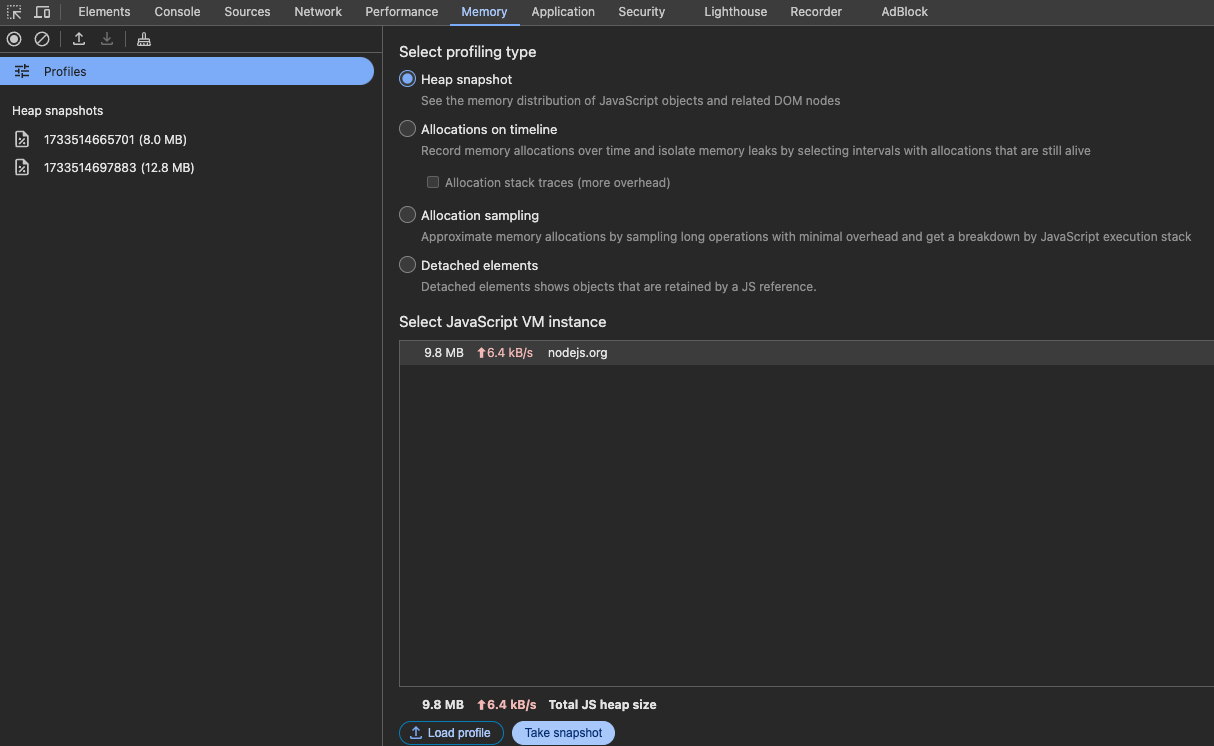
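As an alternative to piping the snapshot stream yourself, Node.js (v11.13.0 and later) provides v8.writeHeapSnapshot(), which writes the snapshot to a file synchronously and returns the file name. A minimal sketch of a hypothetical writeSnapshot helper built on it. Taking a snapshot blocks the event loop and temporarily needs additional memory, so it is best used sparingly in production.

```typescript
import * as fs from "fs";
import * as v8 from "v8";

// Hypothetical helper: write a heap snapshot now and return its file name.
function writeSnapshot(): string {
  const fileName = v8.writeHeapSnapshot(`${Date.now()}.heapsnapshot`);
  const sizeBytes = fs.statSync(fileName).size;
  console.log(`Heap snapshot written: ${fileName} (${sizeBytes} bytes)`);
  return fileName;
}
```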

Run the program for a while so that at least two heap snapshots are written. Once you have two snapshots, you can analyze them. We will use the Chrome browser, since it is also based on the V8 engine and has a built-in tool for heap analysis.

- Open Chrome DevTools

- Navigate to the Memory tab

- In the Memory tab, click the Load profile button and select the first (older) heap snapshot file

- Load the second file

- Finally, you should end up with a view similar to this:

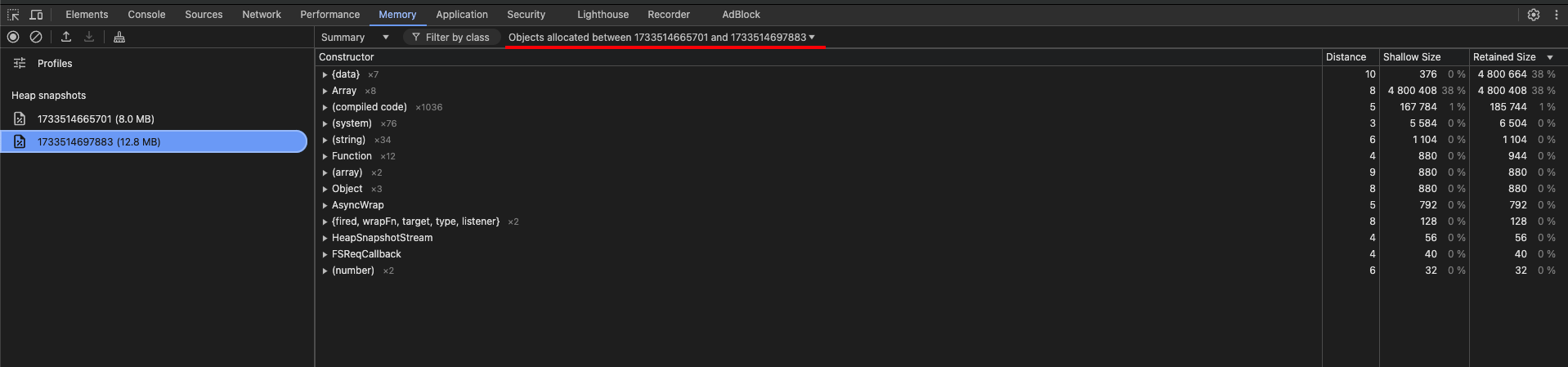

- There are numerous options for analyzing the data; here, we focus on comparing the objects created between the two points in time when the snapshots were taken. To proceed, select the second file, make sure the "Summary" view remains selected, and choose "Objects allocated between xxx and yyy", as shown in the image below:

What should catch our attention are the first two lines of the report. While the Shallow Size of the data object is small, its Retained Size is significant and almost equal to the Shallow and Retained Size of the second entry (Array).

The Shallow Size indicates the amount of memory used directly by an object itself, excluding any references to other objects. The Retained Size represents the total memory that would be freed if a given object were garbage collected, including the memory used by all objects it keeps alive (directly or indirectly).

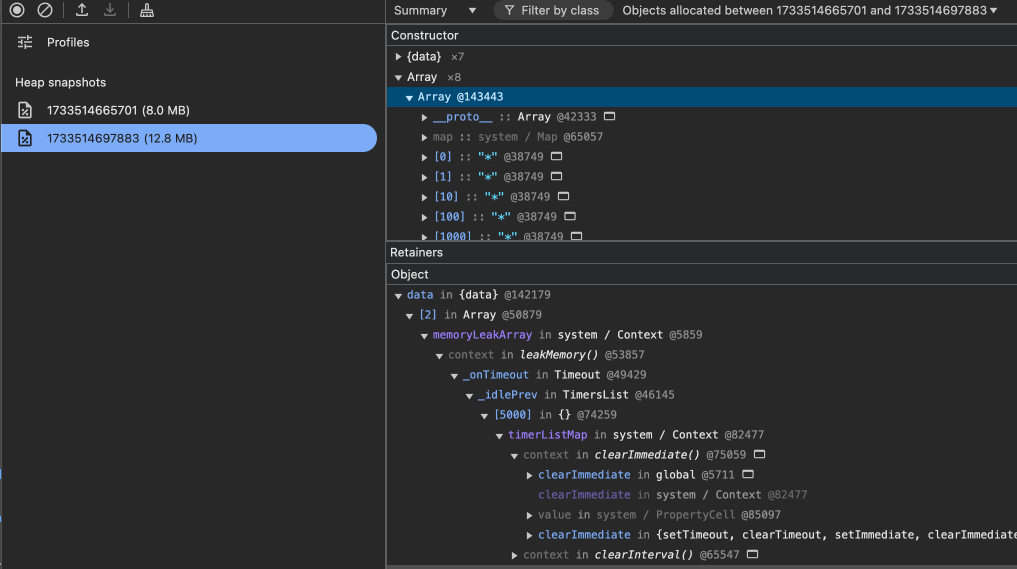

Based on this, we can assume that the data object may hold references to other objects, potentially causing memory usage to grow. Most likely, it is an array. We can now select the second row and expand it to gather more details, such as the object's name and where it is allocated.

Expanding the object reveals that it contains the "*" values and that it is kept alive through memoryLeakArray, which is populated within the leakMemory function.

We can now examine our code to identify and resolve the cause of the memory growth or leak. In this case, the issue was straightforward to locate because the code was simple. However, in a real-world scenario, it can be more complex. Nevertheless, the steps we followed will help you better understand memory allocation in your program.
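The difference between Shallow Size and Retained Size can be reproduced on a toy heap. Following the definition given earlier, the retained size of an object is the total shallow size of everything that would become unreachable if that object were removed. A minimal sketch, assuming a hypothetical graph whose shallow sizes are made-up numbers rather than values from the snapshot; reachableFrom and retainedSize are illustrative names.

```typescript
// Toy heap: each node has a shallow size in bytes and the ids it references.
type HeapNode = { shallowSize: number; refs: string[] };
type ToyHeap = Map<string, HeapNode>;

// Collect all nodes reachable from the roots, treating `excluded` as if it were gone.
function reachableFrom(heap: ToyHeap, roots: string[], excluded?: string): Set<string> {
  const seen = new Set<string>();
  const stack = roots.filter((id) => id !== excluded);
  while (stack.length > 0) {
    const id = stack.pop()!;
    if (seen.has(id)) continue;
    seen.add(id);
    for (const ref of heap.get(id)?.refs ?? []) {
      if (ref !== excluded) stack.push(ref);
    }
  }
  return seen;
}

// Retained size: shallow sizes of everything that becomes unreachable without `id`.
function retainedSize(heap: ToyHeap, roots: string[], id: string): number {
  const before = reachableFrom(heap, roots);
  const after = reachableFrom(heap, roots, id);
  let total = 0;
  for (const nodeId of before) {
    if (!after.has(nodeId)) total += heap.get(nodeId)?.shallowSize ?? 0;
  }
  return total;
}

// Made-up sizes loosely modeled on the leak: a small object holding a large array.
const heap: ToyHeap = new Map<string, HeapNode>([
  ["root", { shallowSize: 16, refs: ["memoryLeakArray"] }],
  ["memoryLeakArray", { shallowSize: 32, refs: ["hugeObject"] }],
  ["hugeObject", { shallowSize: 24, refs: ["dataArray"] }],
  ["dataArray", { shallowSize: 800_000, refs: [] }],
]);

console.log(heap.get("hugeObject")?.shallowSize); // 24
console.log(retainedSize(heap, ["root"], "hugeObject")); // 800024
```

If another reachable object also referenced dataArray, removing hugeObject would no longer free the array, and its retained size would drop to its own 24 bytes. DevTools computes retained sizes on the real heap graph far more efficiently; this sketch simply recomputes reachability for clarity.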

Summary

This concludes the series of articles on the core architecture of Node.js. I hope you found this article, along with the entire series, useful. The goal was to provide you with a better understanding of CPU and memory management in Node.js applications, enabling you to create robust and efficient applications.