Data Engineering Fundamentals

Introduction

This is the first post in a series on Data Engineering with AWS. In this post, we will cover some basic terminology related to data engineering that can be useful as a foundation before diving into AWS services related to data management.

Types of data

In data engineering, data is usually categorized into one of three main types:

- structured - highly organized data, stored in a predefined format with a clear and consistent schema. This type of data is easy to search and index, and the relationships between data are well defined. It is usually represented by relational databases, spreadsheets or reports (financial, sales, transaction, inventory, etc.).

- semi-structured - data that does not fit into a regular, well-defined schema, but still has patterns, tags or metadata that describe records. The structure is flexible and can evolve over time. It is usually represented by JSON/XML files, NoSQL databases, logs or emails.

- unstructured - data that has no predefined format or structure, making it the most difficult to store and analyze. This type of data is usually rich in information, but requires significant effort to process and extract what is relevant. Examples include web pages, multimedia files, social media posts and PDF documents.

5 V's of Big Data

The data that you, as a data engineer, will be handling can be described by 5 characteristics:

- Volume - refers to the amount of data that needs to be ingested, transformed and stored from different sources (IoT devices, sensors, transactions, logs, applications, etc.).

- Velocity - refers to the speed at which data is generated and needs to be processed. When dealing with velocity, we can distinguish three main categories: real time, near real time (usually a delay of about 1 to 15 minutes) and batch processing (a delay above 1 hour).

- Variety - refers to the diversity of data types (structured, semi-structured or unstructured).

- Veracity - refers to the accuracy and reliability of data. Veracity addresses data quality challenges, such as inconsistencies, which can impact the validity of analysis.

- Value - refers to the insights and benefits that can be extracted from data. It emphasizes the tangible business outcomes and actionable intelligence that result from processing and transforming ingested raw data.

Based on these characteristics, you can design and implement a data pipeline that aligns with AWS best practices, ensuring scalability, efficiency, and cost-effectiveness.

Data Lake vs Data Warehouse

Data Lake and Data Warehouse are storage solutions for data with different characteristics and needs.

- Data Lake - a cost-effective storage solution for massive amounts of raw, unprocessed data of all types (structured, semi-structured, and unstructured). It applies a schema only when the data is read (schema-on-read). Data lakes are designed for big data analytics, including machine learning, artificial intelligence, and advanced data exploration. Minimal processing is required before data is stored. Common platforms providing data lake functionality include Hadoop, Amazon S3, Azure Data Lake, and Google Cloud Storage.

- Data Warehouse - a more expensive storage solution designed for processed and structured data with a well-defined schema. It focuses on optimized query performance rather than raw data storage. Data is typically transformed and processed before being loaded (ETL: Extract, Transform, Load). Data warehouses are ideal for performing historical queries and generating business insights. Common platforms providing data warehouse functionality include traditional database management systems (DBMS), Amazon Redshift, Google BigQuery, Snowflake and Microsoft Azure Synapse.
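To make schema-on-read more concrete, here is a minimal sketch in plain Python. The raw JSON lines, the field names and the `read_with_schema` helper are all made up for illustration; real data lake engines apply schemas in their own way. The point is only that the raw data is stored untouched and the schema is chosen by the reader.

```python
import json

# Hypothetical raw events, stored exactly as they arrived (no upfront schema).
RAW_EVENTS = [
    '{"device": "d1", "temp": "21.5", "extra": {"fw": "1.2"}}',
    '{"device": "d2", "temp": "19"}',
]

def read_with_schema(raw_lines, schema):
    """Apply a schema at read time: keep only the schema's fields and cast them."""
    for line in raw_lines:
        record = json.loads(line)
        yield {
            field: cast(record[field])
            for field, cast in schema.items()
            if field in record
        }

# The reader decides which fields and types it needs.
rows = list(read_with_schema(RAW_EVENTS, {"device": str, "temp": float}))
print(rows)  # [{'device': 'd1', 'temp': 21.5}, {'device': 'd2', 'temp': 19.0}]
```

A data warehouse would instead run this kind of casting and filtering before loading, so every stored row already matches the table schema.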

Data Mesh

Data Mesh is an emerging paradigm for managing and handling data, where data is treated as a product. This means that each set of data, such as a dataset or a data pipeline, is owned and maintained by a dedicated team responsible for its quality, security and availability. By adopting this approach, organizations aim to decentralize data ownership and distribute responsibilities across different teams (usually aligned with specific business domains).

Data Lineage

Data Lineage refers to the visual representation that traces the flow of data from its source to its destination, including all transformations applied along the way. The primary goal of data lineage is to help organizations maintain, monitor, debug, and validate their data pipelines. It provides a clear understanding of where the data originates, how it is transformed, and how it is ultimately used. This visibility can aid in troubleshooting transformation failures, monitoring pipeline efficiency, and identifying potential sensitive data leaks.

On AWS, data lineage can be built using Amazon Neptune, AWS Glue and the Spline agent.
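Conceptually, lineage is a directed graph of datasets and the transformations between them. The sketch below is not tied to Neptune, Glue or Spline; the dataset names and the `upstream` helper are hypothetical and only show how such a graph can answer the question "where does this data come from?".

```python
# Hypothetical lineage edges: each dataset maps to the datasets it is derived from.
LINEAGE = {
    "sales_report": ["sales_clean"],
    "sales_clean": ["sales_raw", "currency_rates"],
    "sales_raw": [],
    "currency_rates": [],
}

def upstream(dataset, lineage):
    """Return every dataset the given one depends on, directly or indirectly."""
    seen = []
    stack = list(lineage.get(dataset, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.append(current)
            stack.extend(lineage.get(current, []))
    return seen

sources = upstream("sales_report", LINEAGE)
print(sources)
```

If `sales_report` shows wrong numbers, this list is the set of places to look for the cause.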

Data Sampling Techniques

Data Sampling is a technique used by data engineers to create a representative subset of a large and complex dataset while preserving the same characteristics and patterns as the original. This technique is particularly useful for preparing testing datasets that help reduce storage and processing costs while validating and testing data pipelines.

There are many sampling methods, but we will explore a few probability-based ones:

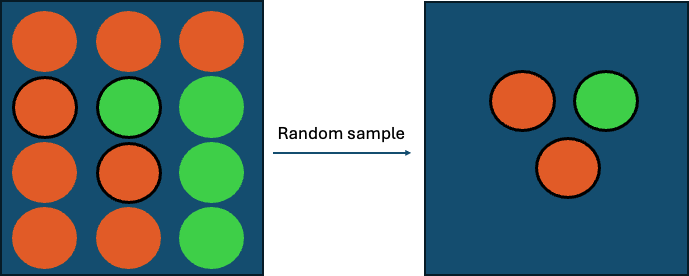

Simple random sampling- every member of a population has an equal chance of being selected. In order to conduct this type of sampling, tools such as random number generators can be used.
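A minimal sketch of simple random sampling using Python's standard `random` module. The population of 30 numbered items and the `simple_random_sample` helper are assumptions made for the example.

```python
import random

def simple_random_sample(population, sample_size, seed=None):
    """Draw sample_size items without replacement; every item is equally likely."""
    rng = random.Random(seed)  # a seeded generator makes the draw reproducible
    return rng.sample(population, sample_size)

population = list(range(1, 31))  # hypothetical population of 30 numbered items
sample = simple_random_sample(population, 5, seed=42)
print(sample)
```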

Systematic sampling- in systematic sampling, every member of a population is assigned a consecutive number. To conduct this type of sampling, you randomly select a starting point within the first interval (based on the population size and the required sample size) and then select individuals at regular intervals.

Suppose you have a population of 30 items and need a sample of 5 items. First, calculate the sampling interval: divide the population size (30) by the sample size (5), which gives an interval of 6. Next, randomly choose a starting point between 1 and 6. If the starting point is 4, your sample would include the following items: 4, 10, 16, 22, and 28.
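The same example can be sketched in Python. The `systematic_sample` helper is hypothetical, and it assumes the population size is divisible by the sample size, as in the example above.

```python
import random

def systematic_sample(population, sample_size, start=None, seed=None):
    """Pick every k-th item after a random start within the first interval."""
    interval = len(population) // sample_size  # 30 // 5 = 6
    if start is None:
        # Random 1-based starting point within the first interval.
        start = random.Random(seed).randint(1, interval)
    # Convert the 1-based start to a 0-based index, then step by the interval.
    return population[start - 1::interval][:sample_size]

population = list(range(1, 31))
print(systematic_sample(population, 5, start=4))  # [4, 10, 16, 22, 28]
```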

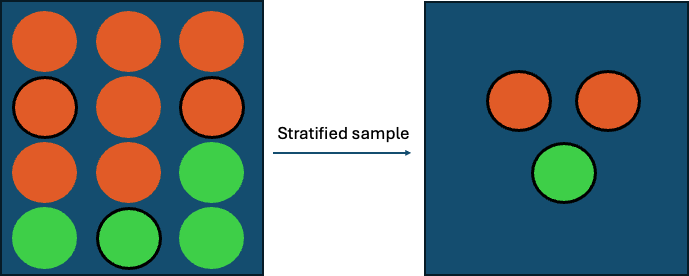

Stratified sampling- in stratified sampling, the population is divided into groups, or strata, based on specific characteristics. The number of individuals selected from each stratum is determined by the size of that stratum relative to the total population. Individuals are then selected from each stratum using random or systematic sampling to create the final sample.

Suppose you have a population of 30 items, consisting of 20 orange items and 10 blue items, and you need a sample of 5 items. First, divide the population into two strata: 20 orange items and 10 blue items. Next, calculate how many items to select from each stratum based on its proportion of the total population: 5 × 20/30 ≈ 3.3 for the orange group and 5 × 10/30 ≈ 1.7 for the blue group. After rounding, select 3 items from the orange group and 2 items from the blue group.
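Here is a small sketch of proportional stratified sampling for the orange and blue example. The `stratified_sample` helper is hypothetical. It rounds each stratum's share to the nearest whole item, which works for this example but does not always add up to the requested sample size for other proportions.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(items, key, sample_size, seed=None):
    """Sample from each stratum in proportion to its share of the population."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for item in items:
        strata[key(item)].append(item)
    sample = []
    for members in strata.values():
        # Proportional allocation, rounded to the nearest whole item.
        n = round(sample_size * len(members) / len(items))
        sample.extend(rng.sample(members, n))
    return sample

# 20 orange items and 10 blue items, as in the example above.
population = [("orange", i) for i in range(20)] + [("blue", i) for i in range(10)]
sample = stratified_sample(population, key=lambda item: item[0], sample_size=5, seed=7)
counts = Counter(color for color, _ in sample)
print(counts)
```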

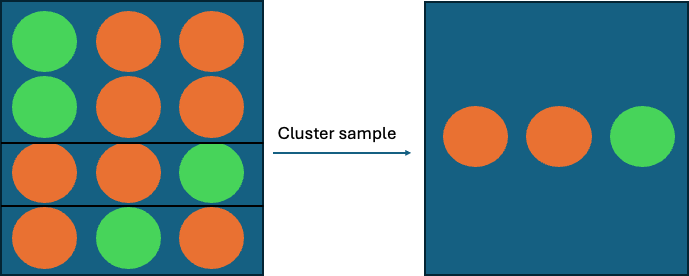

Cluster sampling- in cluster sampling, the population is divided into subgroups, or clusters, where each cluster has characteristics that closely resemble those of the overall population. Instead of selecting individuals from each cluster, entire clusters are randomly chosen, and all members within the selected clusters are included in the sample.
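A minimal sketch of cluster sampling. The store names and the `cluster_sample` helper are made up for illustration; the key point is that whole clusters are drawn at random and every member of a chosen cluster is kept.

```python
import random

def cluster_sample(clusters, num_clusters, seed=None):
    """Randomly choose whole clusters and return all of their members."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(clusters), num_clusters)
    return [member for name in chosen for member in clusters[name]]

# Hypothetical clusters: orders grouped by the store they came from.
clusters = {
    "store_a": [1, 2, 3],
    "store_b": [4, 5, 6],
    "store_c": [7, 8, 9],
    "store_d": [10, 11, 12],
}
sample = cluster_sample(clusters, 2, seed=1)
print(sample)
```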

Data Skew

Data Skew refers to a situation where data (e.g., traffic or load) is unevenly distributed across nodes, partitions, clusters, or similar structures. This phenomenon is sometimes referred to as the "hot partition" or "celebrity problem." It often occurs when the chosen key pattern for distributing data is not optimal.

Data skew can be either a temporary issue—such as high traffic caused by backfilling data when an IoT device that was offline for an extended period comes back online—or a persistent problem due to structural or design flaws in the distribution strategy.

How to address data skew depends heavily on the root cause of the problem. Here are some example solutions that can be applied in certain cases:

- Salting keys - adding a random or calculated prefix (salt) to the keys in the dataset to distribute data more evenly across partitions. This ensures that dominant data is spread across different clusters or partitions, preventing bottlenecks and improving load balancing.

- Range Partitioning - using custom logic to explicitly specify ranges for partitioning based on data distribution. This approach helps distribute hot keys across a wider range of partitions, ensuring better load balancing.

- Scaling Horizontally - increasing the number of nodes or partitions to reduce the load on individual nodes.
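To illustrate key salting, here is a rough simulation in plain Python. The partition count, the number of salts, the `celebrity` hot key and the hash-based `partition_for` function are all assumptions for this sketch; real systems use their own partitioners.

```python
import hashlib
import random
from collections import Counter

NUM_PARTITIONS = 8  # hypothetical number of partitions
NUM_SALTS = 8       # hypothetical number of distinct salt values

def partition_for(key):
    """Map a key to a partition using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % NUM_PARTITIONS

def salted_key(key, rng):
    """Prefix the key with a random salt so one hot key becomes several keys."""
    return f"{rng.randrange(NUM_SALTS)}_{key}"

rng = random.Random(0)
# 900 events for a single hot key plus 100 events for ordinary keys.
events = ["celebrity"] * 900 + [f"user_{i}" for i in range(100)]

unsalted = Counter(partition_for(k) for k in events)
salted = Counter(partition_for(salted_key(k, rng)) for k in events)
print("busiest partition without salt:", max(unsalted.values()))
print("busiest partition with salt:", max(salted.values()))
```

The trade-off is on the read side: to get all records for one original key, a reader has to query every salted variant and combine the results.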

Data Validation and Profiling

Data Validation and Profiling is a process that ensures our data is complete, correct and fit for use in analysis. There are several dimensions we can use to validate and profile our data:

- Completeness - validates whether all required data is present and ensures that no critical information is missing. Missing data can result in invalid or misleading insights, leading to inaccuracies in reports and data analysis. Completeness validation covers counting null values, checking for missing data and calculating the percentage of missing values.

- Consistency - validates whether data from different sources is consistent across the entire dataset. Inconsistent data can cause confusion and negatively affect insights. Consistency validation involves cross-checking data across sources to ensure the same target scale or standard is used (e.g., Celsius for temperature across all data sources).

- Accuracy - validates whether the data is accurate, reliable, and correctly represents what it is supposed to. Inaccurate data can lead to poor decisions and false insights. Accuracy validation involves cross-checking the data against multiple sources and verifying it against known standards and rules.

- Integrity - ensures that data maintains its relationships and consistency throughout the entire data pipeline. Integrity can be enforced by preserving relationships between data using foreign keys or other database techniques.
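As an example of a completeness check, the sketch below counts missing values per required field and reports them as a percentage. The sensor records, the field names and the `completeness_report` helper are hypothetical; a field counts as missing when it is absent or set to `None`.

```python
def completeness_report(records, required_fields):
    """Count missing (absent or None) values for each required field."""
    total = len(records)
    report = {}
    for field in required_fields:
        missing = sum(1 for record in records if record.get(field) is None)
        report[field] = {
            "missing": missing,
            "missing_pct": 100 * missing / total if total else 0.0,
        }
    return report

# Hypothetical sensor readings with some gaps.
records = [
    {"sensor_id": "a1", "temperature_c": 21.5},
    {"sensor_id": "a2", "temperature_c": None},
    {"sensor_id": None, "temperature_c": 19.0},
    {"sensor_id": "a4"},
]
report = completeness_report(records, ["sensor_id", "temperature_c"])
print(report)
# {'sensor_id': {'missing': 1, 'missing_pct': 25.0},
#  'temperature_c': {'missing': 2, 'missing_pct': 50.0}}
```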

Summary

In this post, we covered fundamental terminology related to data engineering. In the upcoming posts, we will delve deeper into AWS services, with a focus on designing and implementing data pipelines while adhering to well-architected principles. Stay tuned for more insights!