Partition projection with Amazon Athena

Introduction

In this post, we will explore different options for querying data using Amazon Athena. We will examine how Athena works, the partitioning mechanism it uses, and its correlation with the AWS Glue Data Catalog.

Amazon Athena and Glue Data Catalog

Amazon Athena is a serverless, interactive query tool that allows you to analyze data stored directly in Amazon S3 using SQL. It is built on a distributed query engine, which enables fast querying of large datasets without the need to manage infrastructure. Since Athena is a serverless service, you pay only for the queries executed and the amount of data scanned on S3.

Athena can query structured, semi-structured, and unstructured data in formats such as CSV, TSV, JSON, Parquet, Avro, or ORC. The stored data can be compressed using common formats like bzip, Snappy, or others. This data is typically organized into partitions, and queries can directly access these partitions for better performance.

Partitioning is a performance optimization technique that helps reduce the time and cost of executing queries. It directs the query engine to look for specific data segments without requiring a full data scan.

Athena also needs to know the schema of the data being queried. This is done by referencing the appropriate table in the AWS Glue Data Catalog.

Scheme definition and discovery

AWS Glue provides a crawler service that can scan files stored in S3 to infer the schema and create or update corresponding tables in the Data Catalog. This means you can automate the schema definition process instead of doing it manually. I covered this functionality in my previous posts. In this one, I would like to explore different options for defining schemas and partitions manually.

To define a schema and partitions, you need to run a DDL (Data Definition Language) query in the AWS Athena Console. The syntax for the query is as follows:

CREATE EXTERNAL TABLE IF NOT EXISTS your_table_name (

column1 type1,

column2 type2

...

)

PARTITIONED BY (partition_column partition_type)

ROW FORMAT SERDE 'data_serializer/deserializer'

WITH SERDEPROPERTIES (

'property_name' = 'property_value'

)

LOCATION 's3://your-data-bucket/data-folder/';

PARTITIONED BY: Used to define partitions for your dataset.ROW FORMAT SERDE: Optional and is used when formats other than TEXT are used. The serializer/deserializer must be selected based on the input data format.SERDEPROPERTIES: Optional and can be used to pass additional parameters to the serializer/deserializer library.LOCATION:Specifies the data location in S3, formatted as s3://your-data-bucket/data-folder/.

For the other possible options, please take a look in the official documentation

The above DDL will create a schema definition. However, for Athena to query the data, we also need to update the information about available partitions. This can be done either by running an AWS Glue crawler or by executing DML (Data Manipulation Language) queries. The relevant queries are:

- ALTER TABLE ADD PARTITION - for non-Hive compatible partitions

- MSCK REPAIR TABLE - for Hive compatible partitions

Wondering what makes partitions Hive-compatible or not? We'll cover that shortly.

Now, it is time to make our hands dirty and run real examples.

Lets start with preparing example data sets. It will be small, but the size does not matter here as we want to explore capabilities of Athena only.

Create two files with some JSON content in it. In my case the files are:

data1.txt:

{"color": "red", "message": "The color is red", "value": 100}

{"color": "pink", "message": "The color is pink", "value": 222}

{"color": "yellow", "message": "The color is yellow", "value": 30}

data2.txt:

{"color": "red", "message": "The color is red", "value": 130}

{"color": "pink", "message": "The color is pink", "value": 252}

{"color": "yellow", "message": "The color is yellow", "value": 44}

{"color": "blue", "message": "The color is blue", "value": -34}

Please note that each line is a separate JSON record (no separator between records). Compress them with any type supported by AWS. I will use GZIP.

Go to AWS S3 console and create two buckets. One will be used to store our data and the other one by Athena to store query results. Once the buckets are ready, go to AWS Athena console and configure the second bucket to be used according to manual.

Now, follow the steps:

- In the data bucket create following structure:

s://your-bucket/1/year=2024/month=10/day=7and uploaddata1.txt.gzfile there. - Go to Athena console and create a schema for a data we uploaded:

CREATE EXTERNAL TABLE data_1 (

color string,

message string,

value integer

)

PARTITIONED BY (year integer, month integer, day integer)

ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'

LOCATION 's3://athena-exampledata-20241007/1';

The above has created a schema for our data. The schema sie defined as data_1 table and is partitioned by year, month and day value.

- Run the query to list the data we uploaded.

SELECT * FROM data_1 WHERE year=2024 AND month=10 AND day=7

As you might expect, we have no results. This is because we have not yet created or refreshed the partition information. As mentioned earlier, this is crucial for Athena to know where to look for the data. To resolve this, we need to run one of the queries defined above.

Now, let's explain what a Hive-compatible schema is. When we define a key for our data using the format key1=value1/key2=value2, we are following the Hive naming convention. This convention helps organize the data by defining partition names along with their corresponding values as part of the key.

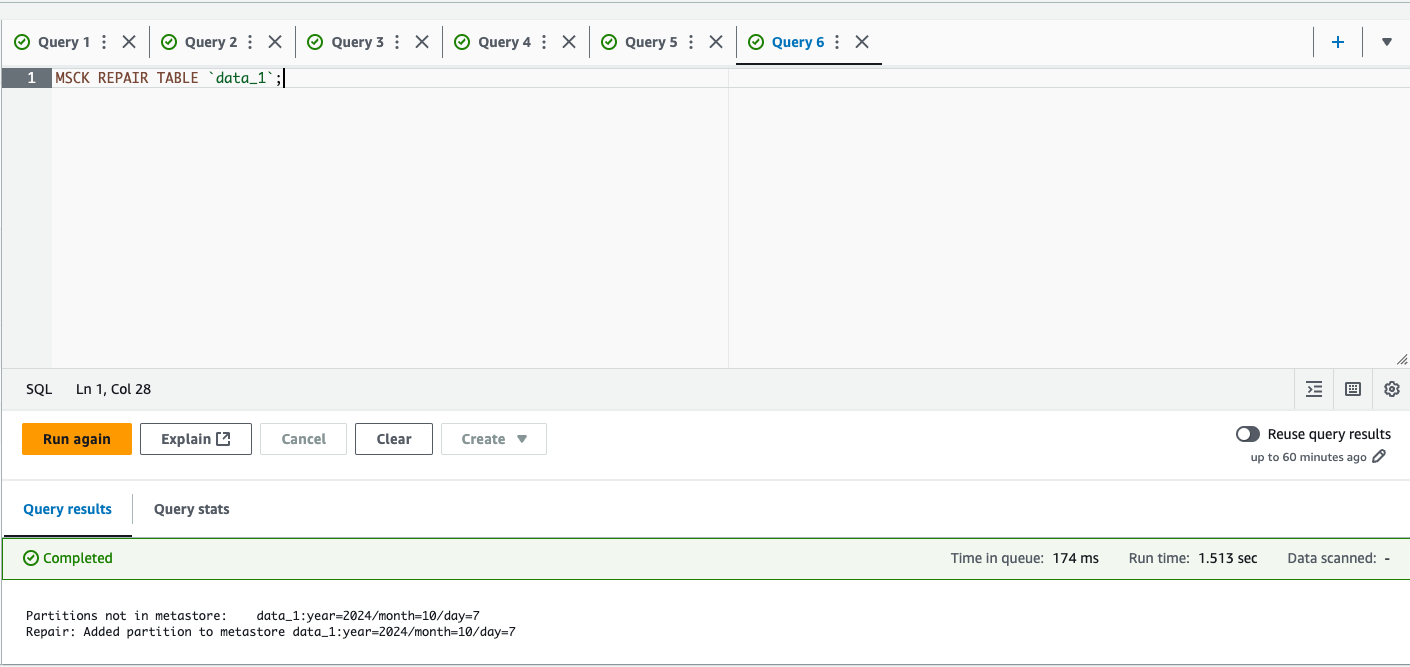

So, in order to fix the missing part we need to run MSCK REPAIR TABLE data_1;. When a query completed, we should see the information about updated metadata.

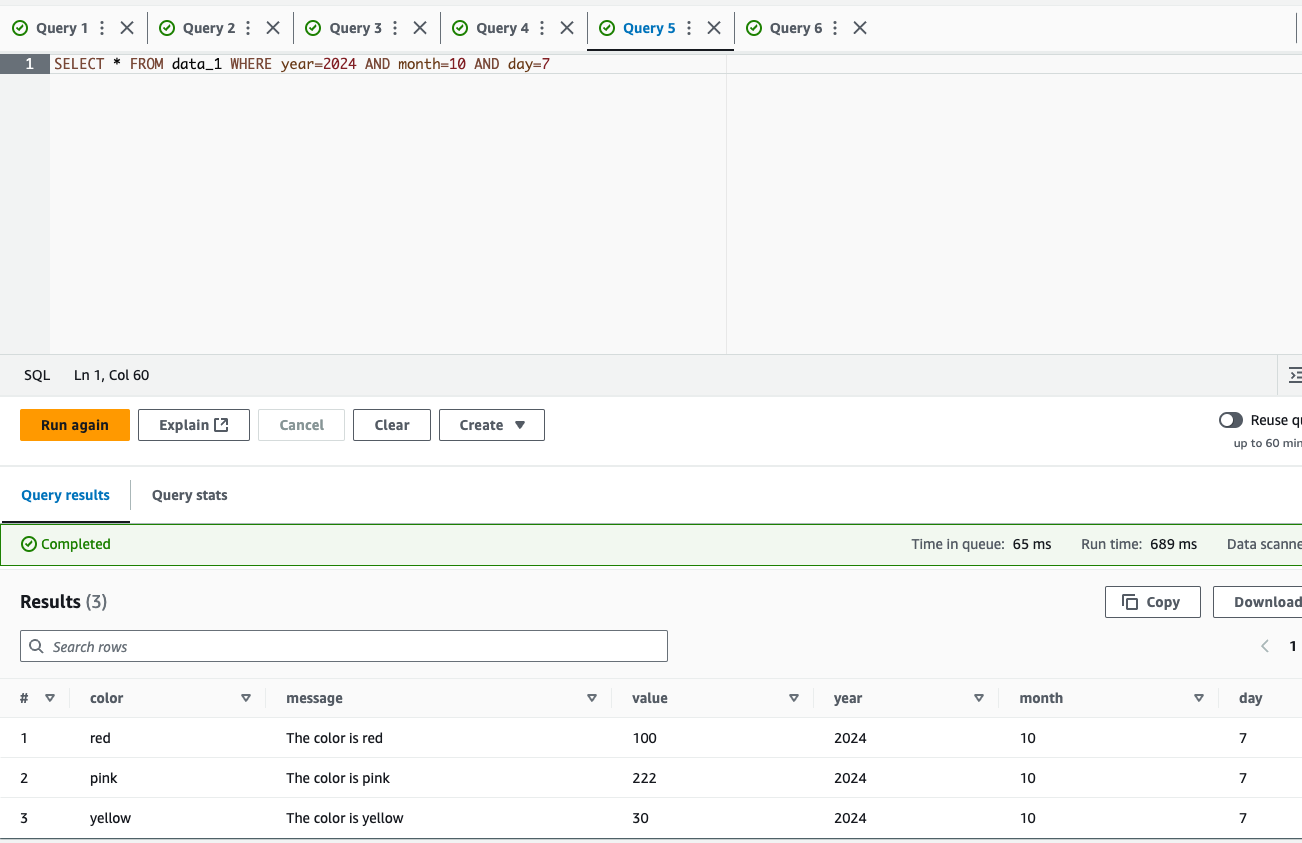

And now we can re-run the query. This time we should see the results.

-

In the next step, create a new partition

s://your-bucket/1/year=2024/month=10/day=8and upload second file there. -

Run the query to see the results:

SELECT * FROM data_1 WHERE year=2024 AND month=10 AND day=8

Since the data has appeared in a new partition that Athena does not yet recognize, we need to refresh the metadata by running the MSCK REPAIR TABLE data_1; query. This will update the partition information. After repairing the table, re-run the query, and the results should now be available.

As you can see, updating the schema and partitions for Hive-compatible data is quite straightforward. Now, let's explore how to handle this for a non-Hive-compatible structure.

we will start from the scratch.

- Go to S3 console and create following structure:

s://your-bucket/2/2024/10/7and uploaddata1.txt.gzfile there. - Create the table that reflects the schema of our data:

CREATE EXTERNAL TABLE data_2 (

color string,

message string,

value integer

)

PARTITIONED BY (year integer, month integer, day integer)

ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'

LOCATION 's3://athena-exampledata-20241007/2';

- Run the query to list the data we uploaded.

SELECT * FROM data_2 WHERE year=2024 AND month=10 AND day=7

As you may expect, there is no results available. The reason is the same as in previous one. We need to update the metadata. But this time, running MSCK REPAIR TABLE data_2 would not work as the partition has a different structure, which is not Hive compatible. In this case, we need to use the second query:

ALTER TABLE data_2 ADD PARTITION (year = 2024, month = 10, day = 7) LOCATION 's3://athena-exampledata-20241007/2/2024/10/7'

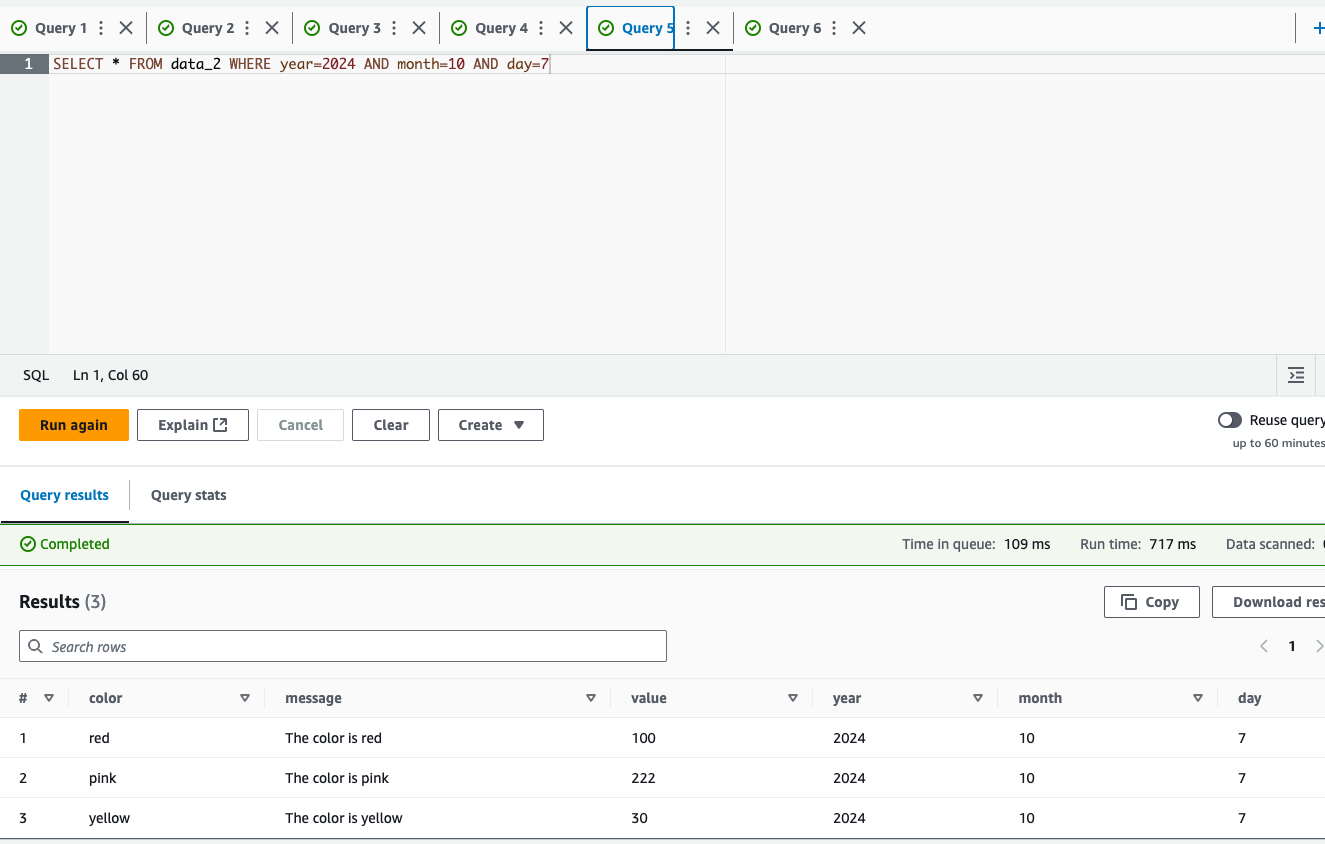

Once the query completed, we can re-run SELECT and the results should be available.

.

.

- If you add more data (in new partitions), such as

data2.txt.gzins3://athena-exampledata-20241007/2/2024/10/7, you will need to re-run theALTER TABLEcommand to make the new data visible to Athena.

As you can see, handling data partitioning manually and querying with Athena is fairly straightforward but can be error-prone due to the manual process of updating metadata. If your data is only queried using Athena (and you don’t need to expose it to third-party tools or services, making the AWS Glue Data Catalog optional), we will explore an alternative approach to managing partitions automatically.

Partition projection

An alternative to using AWS Glue Crawler or manually managing partitions is partition projection. This mechanism can significantly speed up query processing and automate partition management.

With partition projection enabled, Athena computes partition values and locations in memory based on the table definition from the Glue Catalog. It projects the partition values and their corresponding locations according to a defined pattern. Since most of these operations are performed in memory, this approach reduces both the time needed to execute queries and the overhead of updating partition metadata.

Partition projection can be enabled on both, Hive compatible and classic partitions. This is being done by defining extra table properties in format:

'projection.column_name1.type'='type',

'projection.column_name1.range'='value_range',

'projection.column_name2.type'='type',

'projection.column_name2.range'='value_range'

'storage.location.template'='s3://your-data-bucket/key1=${column_name1}/key2=${column_name3}'

There might be several column used for projection mechanism. Columns can be any of the supported types.

Follow the steps to test how it works in real example.

- Go to S3 console and create following structure:

s://your-bucket/3/year=2024/month=10/day=7and uploaddata1.txt.gzfile there. - Create the table that reflects the schema of our data with projections enabled for year, month and day:

CREATE EXTERNAL TABLE IF NOT EXISTS data_3 (

color string,

message string,

value integer

)

PARTITIONED BY (year integer, month integer, day integer)

ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'

LOCATION 's3://athena-exampledata-20241007/3/'

TBLPROPERTIES (

'projection.year.type'='integer',

'projection.year.range'='2023,2025',

'projection.month.type'='integer',

'projection.month.range'='1,12',

'projection.day.type'='integer',

'projection.day.range'='1,31',

'projection.enabled'='true',

'storage.location.template'='s3://athena-exampledata-20241007/3/year=${year}/month=${month}/day=${day}'

);

- Run the query to list the data we uploaded.

SELECT * FROM data_3 WHERE year=2024 AND month=10 AND day=7

As you can see, this time Athena returned the results without refreshing/updating Glue data catalog. That works the same for non-Hive compatible schema. The difference would be only location template, which should match the patter we use to store the data (e.g. s3://athena-exampledata-20241007/4/${year}/${month}/${day}).

Summary

This is all about Athena. I hope you found it interesting and discovered some of the useful features that Athena offers for various ad hoc use cases, such as analyzing logs or other stored data.